Over the past few years, there has been dramatically increased media coverage of solar flares, and the effects they can have on the Earth, primarily on our electrical distribution and communications systems. The emphasis has been on the ability of the flares to cause geomagnetic storms on the Earth, which then can induce currents in our electrical power grids, causing them to go offline from damage. There has been a tremendous amount of fear instilled in the public by the hundreds, if not thousands, of news articles that appear every time there is a solar flare.

Those of us who are shortwave listeners or amateur (ham) radio operators are typically familiar with solar flares, and some of the effects they can cause. To summarize:

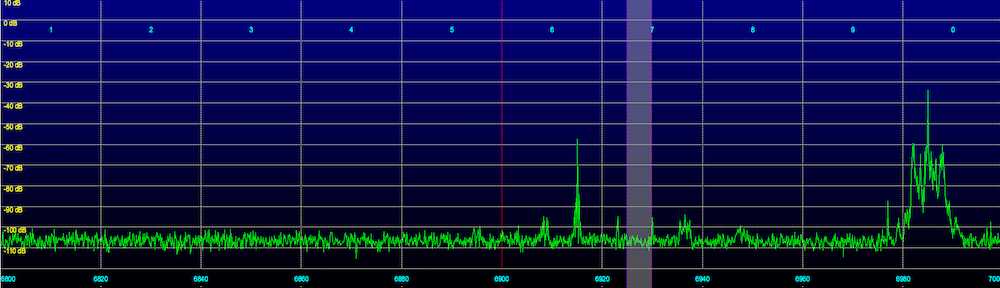

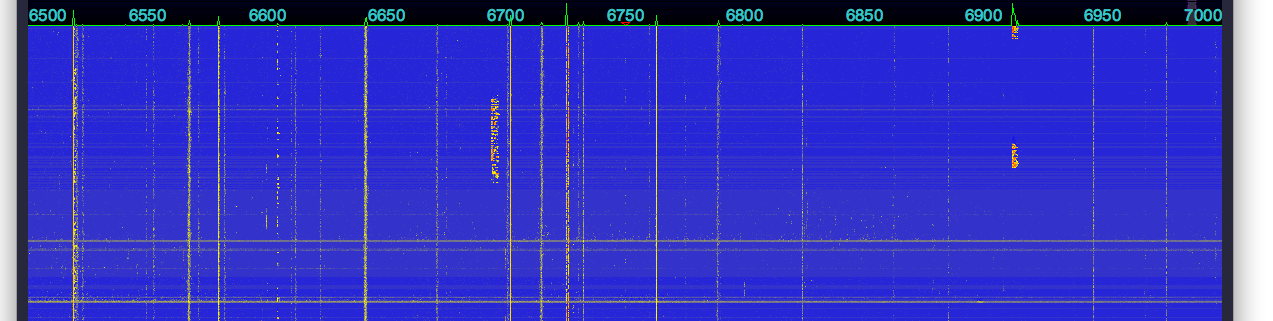

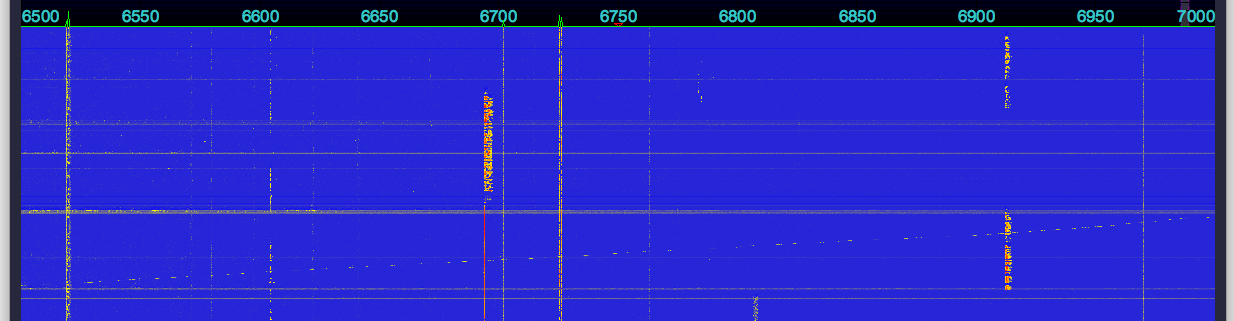

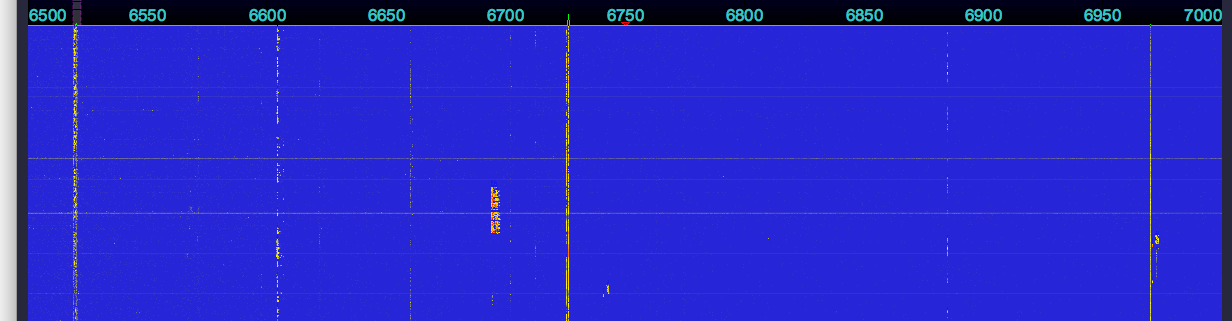

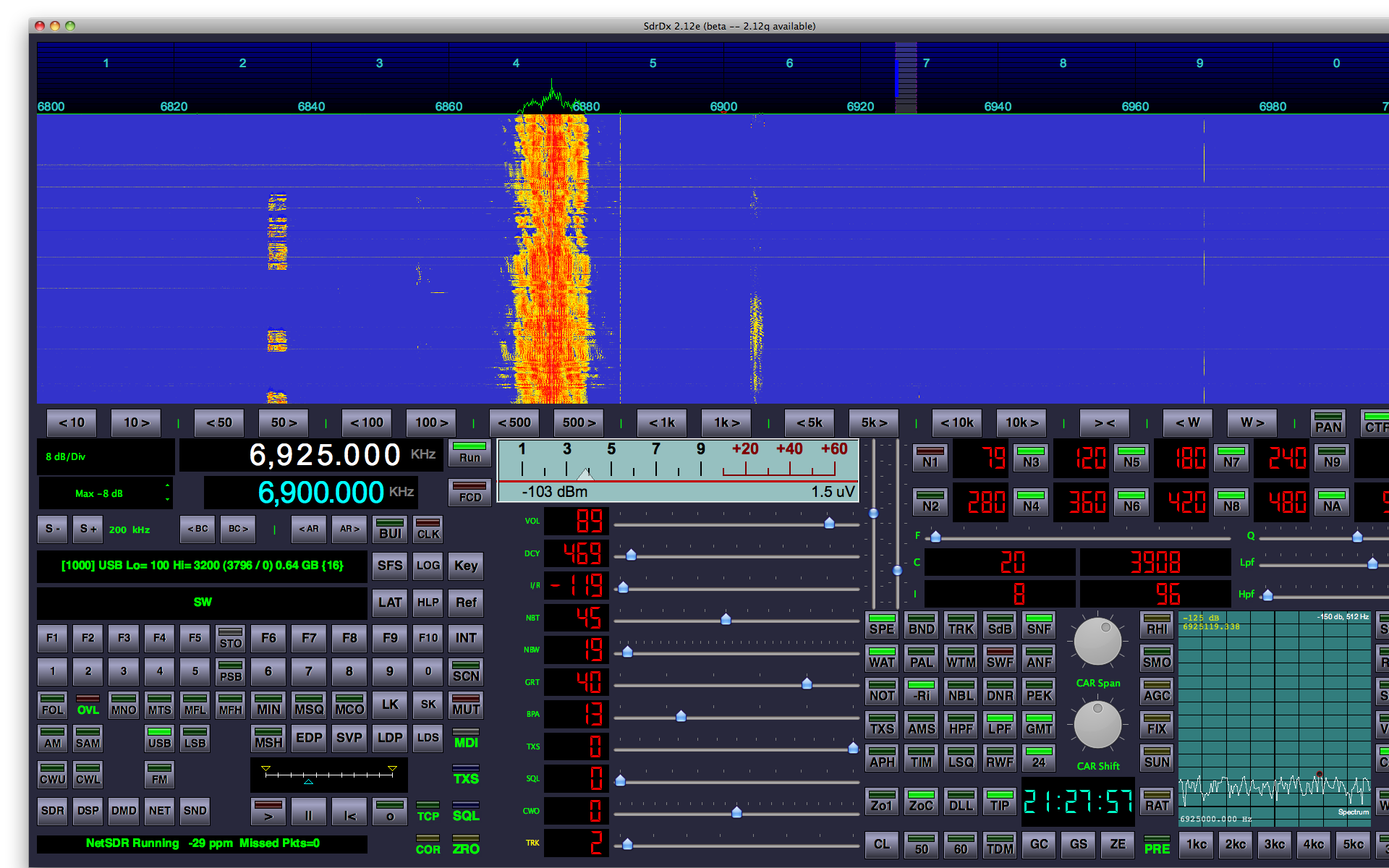

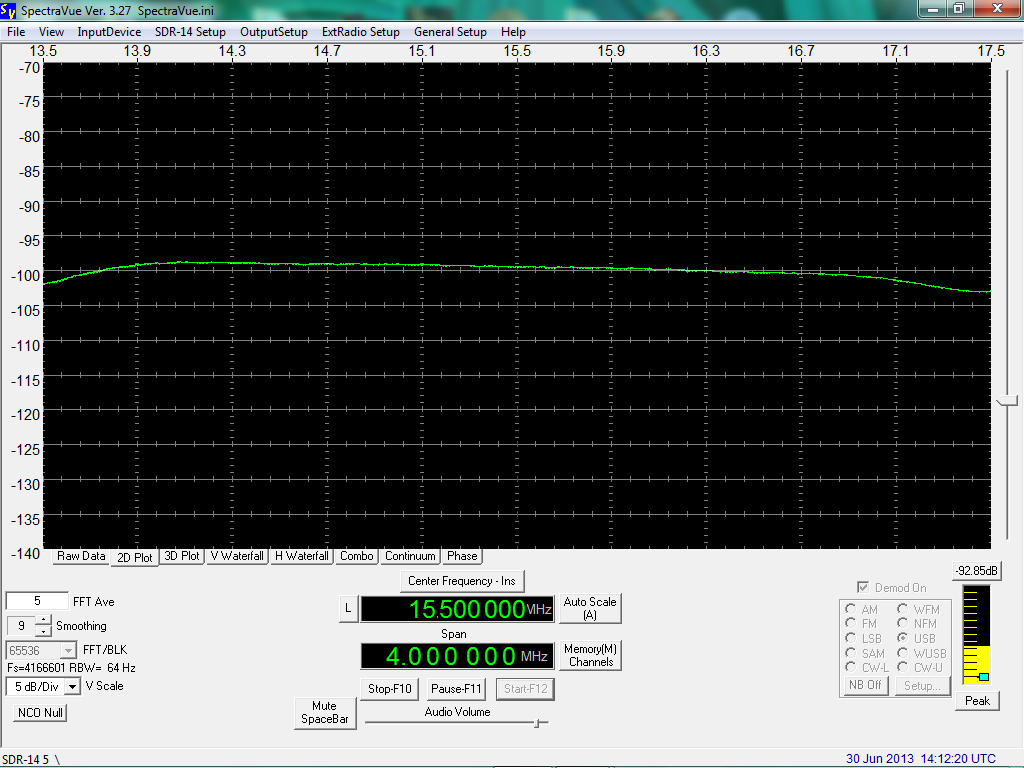

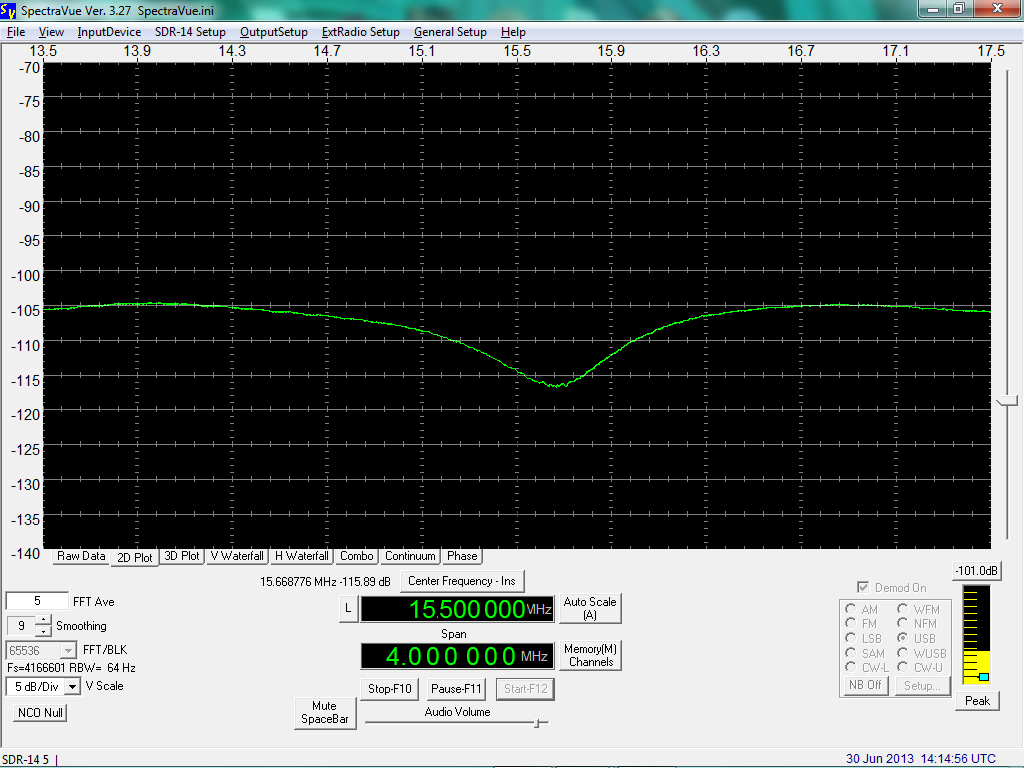

A solar flare is a sudden brightening of a portion of the Sun’s surface. A solar flare releases a large amount of energy in the form of ultraviolet light, x-rays and various charged particles, which blast away from the solar surface. The x-rays can have an almost immediate effect on the Earth’s ionosphere, the layer of charged particles above the atmosphere, which allows for distant reception of shortwave radio signals. I discuss the effects of x-rays on the ionosphere in an earlier article. Energetic solar flares can cause what are known as radio blackouts, where all of the shortwave spectrum appears to be dead, with few or no stations audible. Other communications bands, such as AM / medium wave (MW between 526-1705 kHz), FM, TV, and cellular phones are not affected. Just shortwave. Also, the portion of the Sun producing the flare must be roughly facing the Earth to have an effect, and only the sunlit portion of the Earth is affected.

The solar flare can also cause a Coronal Mass Ejection (CME), which is a burst of energy, plasma, particles, and magnetic fields. The CME typically takes one to three days to reach the Earth. When it does, it can cause a geomagnetic storm, which is a disruption of the Earth’s magnetosphere. The magnetosphere is a region of space surrounding the Earth, where the magnetic field of the Earth interacts with charged particles from the Sun and interplanetary space.
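To get a feel for what a one to three day trip means, here is a rough back-of-the-envelope sketch in Python. It assumes the CME covers one astronomical unit (about 149.6 million km) at a constant average speed, which real CMEs do not do, so treat the numbers as illustrative only. The function name is my own invention.

```python
AU_KM = 149_597_870.7      # mean Sun-Earth distance in kilometers (1 astronomical unit)
SECONDS_PER_DAY = 86_400


def average_cme_speed_km_s(transit_days: float) -> float:
    """Average speed (km/s) needed to cover 1 AU in the given number of days.

    Assumption: constant speed along a straight line, a deliberate simplification.
    """
    if transit_days <= 0:
        raise ValueError("transit_days must be positive")
    return AU_KM / (transit_days * SECONDS_PER_DAY)


if __name__ == "__main__":
    for days in (1, 2, 3):
        print(f"{days} day(s): about {average_cme_speed_km_s(days):.0f} km/s")
```

Under these assumptions, a one day arrival works out to an average of roughly 1,700 km/s, and a three day arrival to a bit under 600 km/s.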

The CME can produce very high radiation levels, but only in outer space. If you’re an astronaut on the International Space Station, this could be a concern. If not, you don’t really have much to worry about. High altitude airline flights can result in somewhat higher than usual radiation doses, but high altitude flights always result in higher than usual radiation doses, due to less atmosphere protecting you from cosmic radiation. You might just get a little more, especially if you fly over the North Pole. Or over the South Pole, but I don’t think there are too many of us who do that.

The effects of a geomagnetic storm:

The Earth’s magnetic field is disturbed. This can cause compass needles to deviate from their correct direction towards the poles, an effect that has been frequently mentioned in medieval texts.

Communications systems can be impacted. As with solar flares, shortwave radio is most affected. AM can also be affected to some degree. FM, TV, and cell phones are not affected.

Back in 1859, there was a large geomagnetic storm, often called the Carrington Event because the solar flare that caused it was seen by British astronomer Richard Carrington. The effects were dramatic. Auroras were seen as far south as the Caribbean. Telegraph lines failed, and some threw sparks that shocked operators. Storms of this magnitude are estimated to occur about every 500 years. Other very large geomagnetic storms occurred in 1921 and 1960, although neither was of the magnitude of the 1859 storm. The term “Carrington Event” has now come to mean an extremely large geomagnetic storm that could cause devastating damage to the communications and electrical systems around the world. But these forecasts are often based on the notion that, with more communications and electrical systems in place, we are much more reliant on these systems and vulnerable to disruption, while ignoring the fact that we better understand how geomagnetic storms cause damage, and what can be done to prevent it. Remember, this was 1859, and very little was even known about how electricity worked, let alone the effects of geomagnetic storms. This was in fact the first time that the relationship between solar flares and geomagnetic storms was established.

Communications satellites can be affected due to the higher radiation levels and unequal currents induced in various parts of the satellites. This could cause the satellites to temporarily malfunction, or even be damaged (which could affect FM, TV, and cell phone calls, which would otherwise be unaffected). As satellites are always in a high radiation environment, they are protected, and it would take very severe conditions to cause extensive damage.

Between 19 October and 5 November 2003 there were 17 solar flares, including the most intense flare ever measured on the GOES x-ray sensor, a huge X28 flare that occurred on November 4. These flares produced what is referred to as the Halloween Storm, causing the loss of one satellite, the Japanese ADEOS-2. Bear in mind that there are almost a thousand active satellites in orbit.
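For anyone unfamiliar with the “X28” notation: GOES flare classes describe the peak x-ray flux in the 0.1 to 0.8 nanometer band on a logarithmic scale. Each letter (A, B, C, M, X) is ten times stronger than the one before it, with X1 equal to 10^-4 watts per square meter, and the number after the letter is a multiplier. Here is a minimal sketch of that conversion; the helper name is hypothetical, and it ignores details such as sensor saturation during the very largest flares.

```python
# Base peak flux in W/m^2 for each GOES class letter (0.1-0.8 nm band).
CLASS_BASE_FLUX = {"A": 1e-8, "B": 1e-7, "C": 1e-6, "M": 1e-5, "X": 1e-4}


def flare_peak_flux(flare_class: str) -> float:
    """Convert a class string such as 'M5.2' or 'X28' to peak flux in W/m^2."""
    text = flare_class.strip().upper()
    if len(text) < 2 or text[0] not in CLASS_BASE_FLUX:
        raise ValueError(f"not a recognizable flare class: {flare_class!r}")
    multiplier = float(text[1:])  # raises ValueError if the number is malformed
    return CLASS_BASE_FLUX[text[0]] * multiplier


if __name__ == "__main__":
    x28 = flare_peak_flux("X28")
    m1 = flare_peak_flux("M1")
    print(f"X28 peak flux: {x28:.1e} W/m^2")
    print(f"X28 is about {x28 / m1:.0f} times an M1 flare")
```

So an X28 is 28 times the threshold for the X class, or about 280 times the flux of a minimal M class flare.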

GPS navigation can also be affected, due to variations in the density of different layers of the ionosphere. This can cause navigation errors.

But the effect that, thanks to media hype, everyone is most concerned about is the possibility of a solar flare causing a geomagnetic storm that destroys the entire power grid, leaving virtually the entire United States without power for weeks or even months. The good news is that this is highly unlikely to happen.

Here’s the scenario: The geomagnetic storm causes currents to be induced in the wires that make up the long distance transmission lines that connect the various electrical power plants and users across the United States, aka the power grid. If these currents become large, they can damage equipment such as transformers, leading to widespread power outages.

And indeed this happened, on a much smaller scale, on March 13, 1989. A geomagnetic storm caused ground induced currents that severely damaged seven static compensators (devices that are used to regulate the grid voltage) on the La Grande network in the Hydro-Quebec, Canada power grid, causing them to trip or shut down automatically. The loss of the compensators allowed fluctuations in the grid voltage, causing damage to other devices. The net result was that over 6 million people in Quebec and the Northeastern United States were without power for about 9 hours. Another million were without power after the 9 hours. Parts of Sweden also had electrical power disruptions.

While being without power for 9 hours, or even several days, sounds dreadful, especially in this age of constant communications, it does happen routinely. Hurricanes and tropical storms often cause millions to lose power each year, as do snowstorms, ice storms, and thunderstorms. Even heat waves have caused massive blackouts. I was without power for a week after Hurricane Isabel in 2003. The concern with an extreme geomagnetic storm, such as a repeat of the Carrington Event, is that critical components such as large transformers could be damaged, which can take time to repair or replace. And there’s the fear that widespread damage to the electrical grid could result in more components being damaged than spare parts exist, causing even longer delays until they can be replaced.

In the two decades since the 1989 event, more protective devices have been installed, and electrical transmission line operators are more aware of the damage caused by induced currents from geomagnetic storms. Preventative measures, such as temporary blackouts for several hours until conditions stabilize, can prevent much of the damage from a large geomagnetic storm. The satellites that continuously monitor the Sun and the Earth’s geomagnetic field can now give electrical transmission line operators the advance warning they need to take those preventative measures.

Also, the 1989 event occurred in Quebec, which is at a very northern latitude. Geomagnetic storms tend to be stronger near the poles, and less severe as you move towards the equator (much like how the aurora is commonly seen near the poles, but not elsewhere).

It’s also worth noting that there are actually three electrical grids in the United States: an Eastern, a Western, and a Texas grid. They are not currently connected to each other, although there are discussions about doing so.

Finally, while a repeat of the Carrington Event is possible, it is extremely unlikely (remember, they are thought to occur about once every 500 years). There are far more important things to plan for, such as blizzards, hurricanes, tornadoes, and even severe thunderstorms, which routinely do occur. It is certainly more prudent to prepare for events like these, by keeping batteries, portable radios, canned food, and jugs of water on hand, than to worry about an event that probably won’t happen again for several hundred years.
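As a rough illustration of what “once every 500 years” implies, the sketch below treats Carrington-class storms as a Poisson process with a constant average rate. That is my own simplifying assumption, not an established model, and the 500 year figure is itself only an estimate. The function name is made up for this example.

```python
import math


def chance_of_at_least_one(years: float, mean_interval_years: float = 500.0) -> float:
    """Probability of one or more events within `years`.

    Assumption: events follow a Poisson process with a constant average
    rate of one event per `mean_interval_years`.
    """
    if years < 0 or mean_interval_years <= 0:
        raise ValueError("years must be >= 0 and mean_interval_years must be > 0")
    return 1.0 - math.exp(-years / mean_interval_years)


if __name__ == "__main__":
    for span in (1, 10, 50):
        print(f"{span:>2} year(s): {chance_of_at_least_one(span):.1%}")
```

With these assumptions, the chance of such a storm in any given decade comes out to about 2%, and about 9.5% over 50 years.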

So, why all the media frenzy and public concern over solar storms?

First, the Sun operates on a roughly 11 year solar cycle. Solar activity, including the appearance of sunspots and solar flares, peaks about every 11 years, and then fades until the next solar peak. There’s a solar peak occurring right about now. The last one was in 2001. This was before Facebook, Twitter, and everyone spending several hours a day on the internet, obsessing about the crisis du jour. Or crisis of the year in this case. Back in 2001, very few people even knew there was such a thing as a solar flare, other than space scientists and ham radio operators.

Those of us who have been involved with radio related hobbies for some time are used to the 11 year cycle. As an SWL since 1978, I’ve witnessed several solar cycles. During a solar peak we get many more flares which disrupt reception, although the overall higher level of solar activity is actually beneficial to shortwave propagation. Plus, it’s more likely that we’ll get to see the aurora. Then things calm down for many years, until the next solar peak.

There’s also been a substantial increase in advocacy by scientists and other public officials for increased spending on solar flare / geomagnetic storm research and related programs. Obviously this is justified to some extent, as we are much more reliant upon technology, and even just electricity, than we were decades ago. Still, I wonder if things are being exaggerated just a wee bit. Government officials and those involved in research have a vested interest in increasing their budgets and staffs – it’s job security for them. I’m not suggesting any malice; pretty much everyone thinks their job is important, especially those in the scientific field. It’s human nature.

This increased advocacy has resulted in increased media coverage as well. I’m far less sympathetic here. The motto of many news organizations seems to be “If it bleeds, it leads”. Pretty much every time there’s a solar flare, there’s a flurry of news articles announcing impending doom. The titles are amusing: not only are there SOLAR STORMS, but also SOLAR HURRICANES, SOLAR TYPHOONS, and SOLAR TSUNAMIS. I haven’t heard of any SOLAR TORNADOES, but maybe next month. Invariably the articles describe how a solar flare can wipe out the entire power grid, sending us all back to the stone age. And this might be the one that does it! Of course, a day or two later, when the CME arrives and little happens other than poor shortwave radio listening and some enhanced Northern Lights, there’s no follow-up article. Although if there were, I’m sure it would state that while we dodged the bullet this time, the next flare will surely fry us all. And our iPhones.

Some examples:

Nasa scientists braced for ‘solar tsunami’ to hit earth

The Daily Telegraph disclosed in June that senior space agency scientists believed the Earth will be hit with unprecedented levels of magnetic energy from solar flares after the Sun wakes “from a deep slumber” sometime around 2013.

Cities blacked out for up to a year, $2 TRILLION of damage – with a 1 in 8 chance of solar ‘megastorm’ by 2014

Imagine large cities without power for a week, a month, or a year. The losses could be $1 to $2 trillion, and the effects could be felt for years.

‘A longer-term outage would likely include, for example, disruption of the transportation, communication, banking, and finance systems, and government services,’ the NRC report said, it was reported on Gizmodo.

‘It could also cause the breakdown of the distribution of water owing to pump failure; and the loss of perishable foods and medications because of lack of refrigeration.’

Solar Flare: What If Biggest Known Sun Storm Hit Today?

Repeat of 1859 space-weather event could paralyze modern life, experts say.

A powerful sun storm—associated with the second biggest solar flare of the current 11-year sun cycle—is now hitting Earth, so far with few consequences. But the potentially “severe geomagnetic storm,” in NASA’s words, could disrupt power grids, radio communications, and GPS as well as spark dazzling auroras.

The storm expected Thursday, though, won’t hold a candle to an 1859 space-weather event, scientists say—and it’s a good thing too.

If a similar sun storm were to occur in the current day—as it well could—modern life could come to a standstill, they add.

The news articles are bad enough, but I suspect the fact that 11 years ago no one saw articles like this, or even knew solar flares existed, has convinced a lot of the public that solar flares (of this magnitude and frequency of occurrence) are a new phenomenon. It probably doesn’t help that this is the year 2012, and we’ve had the Mayan 2012 END OF THE WORLD nonsense to deal with for the last decade or so. I wonder if anyone has retconned Mayan history into them having a solar observatory and being aware of the 11 year solar cycle, and how it would peak in 2012, destroying the Earth. Maybe they even had an x-ray satellite in orbit. I bet the aliens that helped them build their pyramids left them one. The grays are helpful, like that.

Perhaps the most ironic part of this entire saga is that the 2012 solar cycle peak is forecast to be extremely low. Here’s the latest forecast and current progress through the cycle:

The peak smoothed sunspot number (SSN) is forecast to be about 60, vs the 120 for the previous cycle. The lower peak SSN means lower overall solar activity. That means fewer flares, and they should (overall) be less severe. The peak is also forecast to be in 2013, so I’m not sure how that works out for all the 2012 Doomsayers.

To put this further into context, here’s a graph showing all the previous solar cycles:

The red arrow points to the cycle peaking in 1928. The forecast at the time (2009) was that the cycle we’re in now would be similar to that one; it has since turned out that activity is even lower.

The largest peak is Cycle 19, from the 1950s. Many older ham radio operators have fond memories of Cycle 19, when radio propagation conditions were excellent. They were hoping for a repeat with Cycle 24, but that is clearly not the case. And Cycle 25 is currently being forecast by some to be even lower than Cycle 24, although it’s not worth putting much, if any, stock into long range solar cycle predictions. Predictions for our current cycle (24) from just a few years ago had it being as strong as, or even stronger than, the previous cycle, which is clearly not the case.

The period marked as the Maunder Minimum on the above graph was a period of extremely low solar activity around the late 17th century. Very few sunspots were noted during this time period.

While we are indeed entering the peak of a solar cycle, which means more solar flares (and more powerful flares), which can have impacts on the Earth, I believe the historical evidence shows that the doomsday scenarios proposed by many alarmists are not warranted. I would suggest checking with various websites such as http://www.spaceweather.com/ to keep track of when a solar flare has occurred. Not to panic that the end is near, but to know when to go outside and look at the Northern Lights. They can be quite beautiful.